Regularization in Machine Learning: Meaning

It will affect the efficiency of the model. Regularization can be implemented in multiple ways: by modifying the loss function, the sampling method, or the training approach itself.


Regularization helps us fit a model that copes with the bias of the training data.

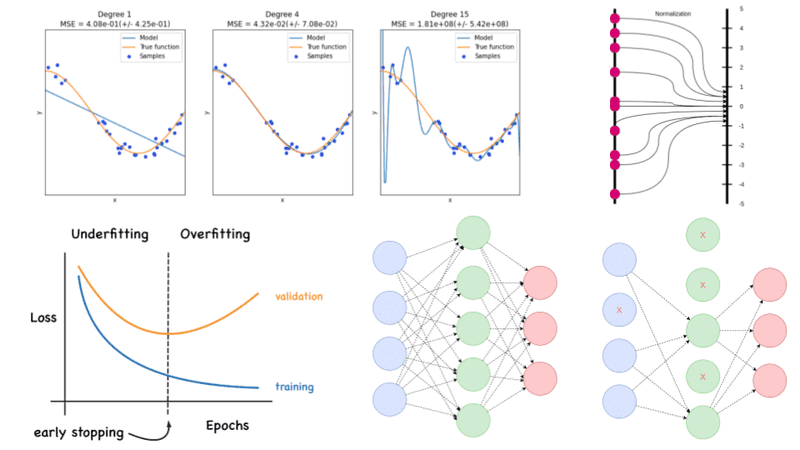

In other terms, regularization means discouraging the learning of a more complex or more flexible machine learning model in order to prevent overfitting. Of course, fancy definitions and complicated terminology are of little worth to a complete beginner. Mainly there are two types of regularization techniques: L1 (lasso) and L2 (ridge) regularization.

As a result, the tuning parameter determines the trade-off between bias and variance in regularization procedures.

The aim is to produce better results on the test set. How well a model fits the training data does not, by itself, determine how well it performs on unseen data.

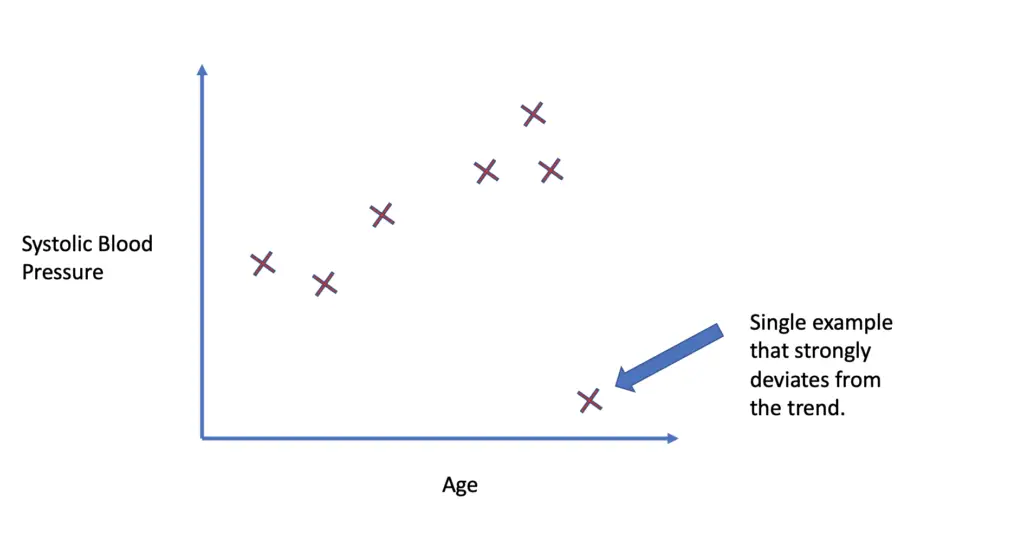

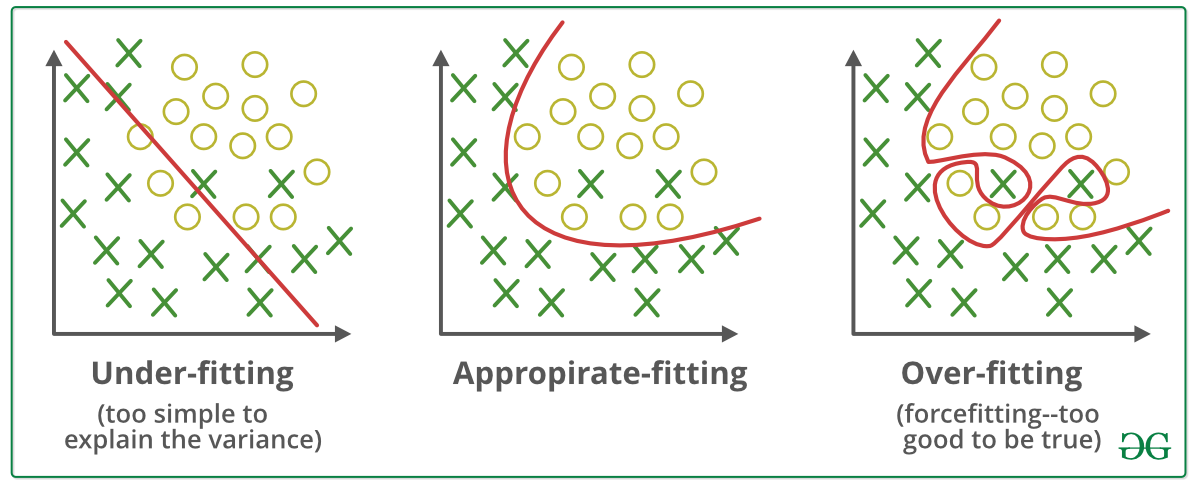

Overfitting is a phenomenon that occurs when a model learns the detail and noise in the training data to the extent that it negatively impacts the model's performance on new data. This is where regularization comes into the picture: it shrinks, or regularizes, the learned estimates towards zero by adding a penalty term to the loss function, so that the model can predict the value of Y accurately on new data. Regularization can greatly reduce a model's variance without significantly increasing its bias.

In other words, regularization adds some extra bias error to the model. Regularization refers to the collection of techniques used to tune machine learning models by minimizing an adjusted loss function in order to prevent overfitting. In machine learning, regularization is a procedure that shrinks the coefficients towards zero.
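The idea of an adjusted loss function can be sketched in a few lines of Python. This is a minimal illustration, assuming a linear model, mean squared error as the base loss, and a squared (L2) penalty on the coefficients. The names `mse`, `l2_penalty`, `regularized_loss`, and the tuning parameter `lam` are hypothetical and do not come from any library, and the data is made up.

```python
def mse(weights, bias, xs, ys):
    """Mean squared error of a linear model on feature rows xs with targets ys."""
    total = 0.0
    for x, y in zip(xs, ys):
        pred = bias + sum(w * xi for w, xi in zip(weights, x))
        total += (pred - y) ** 2
    return total / len(xs)


def l2_penalty(weights):
    """Sum of squared coefficients; grows as the coefficients move away from zero."""
    return sum(w * w for w in weights)


def regularized_loss(weights, bias, xs, ys, lam):
    """Adjusted loss: base loss plus lam times the penalty (lam is an assumed name)."""
    return mse(weights, bias, xs, ys) + lam * l2_penalty(weights)


# Toy data, invented for illustration only.
xs = [[1.0, 2.0], [2.0, 0.5], [3.0, 1.5]]
ys = [3.0, 2.5, 4.5]

small = [0.5, 0.5]    # coefficients close to zero
large = [5.0, -4.0]   # coefficients far from zero

for name, w in (("small", small), ("large", large)):
    print(name, "base loss:", mse(w, 0.0, xs, ys),
          "adjusted loss:", regularized_loss(w, 0.0, xs, ys, lam=0.1))
```

With `lam = 0` the adjusted loss equals the base loss. For any positive `lam`, larger coefficients cost more, which is what pushes the fitted model towards smaller coefficients.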

It is also considered a process of adding more information to resolve a complex issue and avoid overfitting. Regularization is any supplementary technique that aims at making the model generalize better, i.e. produce better results on the test set. To understand the importance of regularization, particularly in the machine learning domain, let us consider two extreme cases.

However, we know that the performance of a model can be improved by applying certain methods. These methods are called regularization methods. Regularization is one of the most important concepts in machine learning.

It is a form of regression that constrains or shrinks the coefficient estimates towards zero. The cheat sheet below summarizes different regularization methods. As the value of the tuning parameter increases, the values of the coefficients decrease, lowering the variance.
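The effect of the tuning parameter on the coefficients can be checked with a small worked example. The sketch below assumes the simplest possible setting: one feature, no intercept, and a squared penalty, so the minimizer of `sum((y - w*x)**2) + lam * w**2` has the closed form `w = sum(x*y) / (sum(x*x) + lam)`. The function name `ridge_coefficient`, the parameter `lam`, and the data are all hypothetical.

```python
def ridge_coefficient(xs, ys, lam):
    """Minimizer of sum((y - w*x)**2) + lam * w**2 for one feature, no intercept."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Toy data, invented for illustration only (roughly y = 2x).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

lams = [0.0, 1.0, 10.0, 100.0]
coefficients = [ridge_coefficient(xs, ys, lam) for lam in lams]

for lam, w in zip(lams, coefficients):
    print(f"lam={lam:>6.1f}  w={w:.4f}")
```

Because `lam` only appears in the denominator, the coefficient moves steadily towards zero as `lam` grows, without ever changing sign.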

We already discussed the overfitting problem, which causes a machine learning model to make inaccurate predictions. Regularization is used in machine learning as a solution to overfitting: it reduces the variance of the model under consideration. Using regularization, we simplify our model to an appropriate level so that it can generalize to unseen test data.

Generally, regularization means making things acceptable and regular. An overfit model performs well with the training data but not with the test data. We can say that regularization prevents overfitting by adding some more information to the model.

The ways to go about it can differ, for example measuring a loss function and then iterating. This might at first seem too general to be useful, but the authors also provide a taxonomy to make sense of the wealth of regularization approaches that this definition encompasses. Regularization adds an extra term to the cost function of the target ML or DL model.

What this additional term does is prevent optimization algorithms such as gradient descent from reaching the weight values that minimize the bias error. In machine learning, regularization imposes an additional penalty on the cost function.
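How the penalty keeps gradient descent away from the weights that minimize the training error can be sketched as follows. This is an illustrative example, assuming a one-feature linear model without intercept, mean squared error as the cost, and a penalty of `lam * w**2`. The names `fit`, `lam`, `lr`, and `steps` are hypothetical, and the data is made up.

```python
def mse(w, xs, ys):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(xs, ys, lam, lr=0.01, steps=2000):
    """Plain gradient descent on mse(w) + lam * w**2, starting from w = 0."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        grad_loss = (2.0 / n) * sum((w * x - y) * x for x, y in zip(xs, ys))
        grad_penalty = 2.0 * lam * w  # gradient of the extra term
        w -= lr * (grad_loss + grad_penalty)
    return w


# Toy data, invented for illustration only.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

w_plain = fit(xs, ys, lam=0.0)  # reaches the minimizer of the training error
w_reg = fit(xs, ys, lam=1.0)    # stops short of it, closer to zero

print("without penalty:", w_plain, "training MSE:", mse(w_plain, xs, ys))
print("with penalty:   ", w_reg, "training MSE:", mse(w_reg, xs, ys))
```

The penalized run ends with a smaller weight and a slightly higher training error. That extra training error is the bias accepted in exchange for a simpler model.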

It is a technique to prevent the model from overfitting by adding extra information to it. In other words, this technique discourages learning a more complex or flexible model, so as to avoid the risk of overfitting. Regularization is the answer to the overfitting problem.

The two extreme cases are an underfit model and an overfit model. Overfitting means that the model is unable to anticipate the outcome when dealing with unknown data. One of the most fundamental topics in machine learning is regularization.

It's a method of preventing the model from overfitting by providing additional information. Regularization techniques are used to increase performance by preventing overfitting in the designed model. Therefore, regularization in machine learning involves adjusting these coefficients by changing their magnitude and shrinking them to enforce generalization.

Regularization is a technique used to solve the overfitting problem in machine learning models. Sometimes a machine learning model performs well with the training data but does not perform well with the test data. The major concern while training a neural network, or any machine learning model, is to avoid overfitting.

It means the model is not able to predict the output when dealing with unseen data.
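The symptom described here, good results on the training data but poor results on the test data, can be made concrete with a toy comparison. The sketch below is only an illustration under invented numbers: `memorizer` is a hypothetical model that stores every training target and returns the one belonging to the nearest training input, and `simple_line` is a hypothetical simpler model fixed at `y = 2x`. Neither comes from the original text.

```python
def mse(predict, xs, ys):
    """Mean squared error of a prediction function on inputs xs and targets ys."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data, invented for illustration only.
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [2.3, 3.6, 6.4, 7.7]   # noisy observations
test_x = [1.5, 2.5, 3.5]
test_y = [3.0, 5.0, 7.0]

memory = dict(zip(train_x, train_y))


def memorizer(x):
    """Overly flexible model: returns the stored target of the nearest training input."""
    nearest = min(train_x, key=lambda t: abs(t - x))
    return memory[nearest]


def simple_line(x):
    """Simpler model with a single fixed coefficient (an assumption for this example)."""
    return 2.0 * x


for name, model in (("memorizer", memorizer), ("simple_line", simple_line)):
    print(name,
          "train MSE:", mse(model, train_x, train_y),
          "test MSE:", mse(model, test_x, test_y))
```

The memorizing model has no error on the training data because it reproduces the noise exactly, yet it does worse than the simpler model on the test data. Comparing training and test error in this way is a common check for overfitting.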
